Designing a Cloud Simulation Engine

The Core Challenge

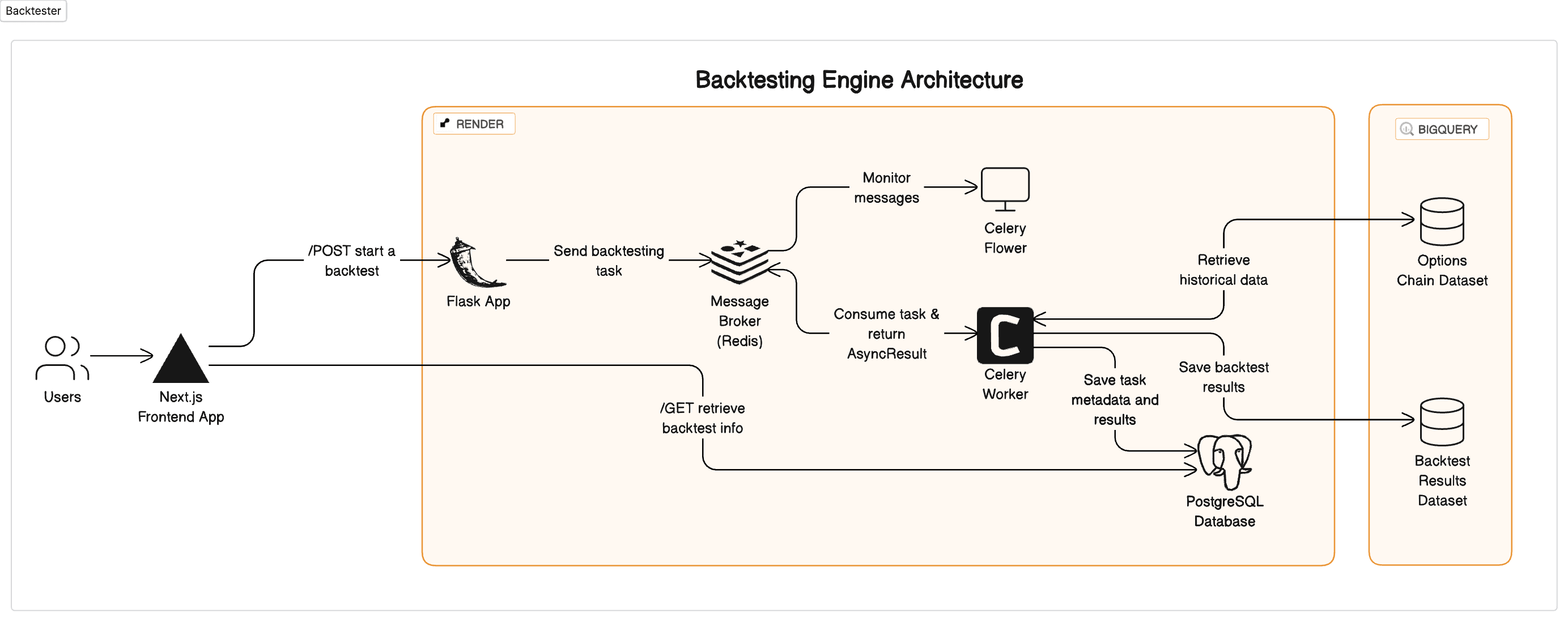

The heart of our project was handling long-running backtesting tasks efficiently in a cloud environment. These tasks simulate trades on historical data and require extensive computational resources and time. Our solution needed to be robust, scalable, and efficient so that the backtesting engine could run reliably under load.

Software Architecture

Our journey began with choosing the right cloud infrastructure. Google Cloud Platform (GCP) and BigQuery formed the backbone of our data handling capabilities, offering the scalability and performance we needed to query historical data. The real challenge, however, was managing long-running tasks efficiently.

Frontend and User Interaction

Our frontend is built with Next.js, React, Tailwind CSS, and Supabase, and is hosted as a web service on Vercel. Once authenticated, users can submit backtesting requests to our simulation engine. These requests are sent to our Flask web service, which handles incoming requests and dispatches the backtesting tasks.

Compute Infrastructure

Flask

Flask, a lightweight and flexible Python web framework, acts as the conductor in our orchestration. It efficiently handles incoming requests and delegates tasks to Celery. This separation of concerns allows our Flask application to remain highly responsive, even when heavy backtesting tasks are being processed in the background.

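Celery tasks can be defined in your Flask app by using the Celery task decorator:

    import os

    from celery import Celery

    # Read the broker URL from the environment. The instance is named
    # `celery` so it does not collide with the Flask `app` object.
    celery = Celery('tasks', broker=os.environ['CELERY_BROKER_URL'])

    # bind=True passes the task instance to the function as `self`.
    @celery.task(bind=True)
    def long_running_task(self, params):
        ...

These tasks can be called from other Flask endpoints by using the delay method: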

    from flask import Flask

    app = Flask(__name__)

    @app.route('/get_api_endpoint', methods=['GET'])
    def get_api_endpoint():
        ...
        # Enqueue the task and return immediately; a Celery worker runs it.
        async_result = long_running_task.delay(params)
        ...

Celery Workers & Redis Message Broker

Upon receiving a backtest request, our Flask application delegates the task to a Celery worker. This worker is crucial for managing the long-running process, allowing tasks to be executed asynchronously and efficiently. We leveraged Redis as our message broker to facilitate this communication, ensuring a smooth and responsive user experience.
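The enqueue-and-return flow described above can be sketched with nothing but Python's standard library. This is an in-process stand-in, not Celery itself: `task_queue` plays the role of the Redis broker, the `results` dictionary plays the role of a result store, and `run_backtest`, `submit`, and `worker` are hypothetical names chosen for illustration. The state names mirror Celery's built-in task states.

```python
import queue
import threading
import uuid

# In-process stand-ins (assumptions for illustration only):
# task_queue acts as the message broker, results as the result store.
task_queue = queue.Queue()
results = {}
results_lock = threading.Lock()


def run_backtest(params):
    """Hypothetical placeholder for the real long-running backtest."""
    return {"trades": len(params["symbols"])}


def submit(params):
    """What the web handler does: enqueue the job and return an id at once."""
    task_id = str(uuid.uuid4())
    with results_lock:
        results[task_id] = {"state": "PENDING", "result": None}
    task_queue.put((task_id, params))
    return task_id


def worker():
    """What a worker does: pull jobs off the queue and run them one by one."""
    while True:
        item = task_queue.get()
        if item is None:  # sentinel value: shut the worker down
            task_queue.task_done()
            break
        task_id, params = item
        try:
            outcome = {"state": "SUCCESS", "result": run_backtest(params)}
        except Exception as exc:
            outcome = {"state": "FAILURE", "result": repr(exc)}
        with results_lock:
            results[task_id] = outcome
        task_queue.task_done()


worker_thread = threading.Thread(target=worker, daemon=True)
worker_thread.start()

task_id = submit({"symbols": ["AAPL", "MSFT"]})
task_queue.join()  # wait for the worker here; a web handler would not block
print(results[task_id])

task_queue.put(None)  # stop the worker
worker_thread.join()
```

The point of the pattern is that `submit` returns as soon as the job is queued, so the caller stays responsive no matter how long `run_backtest` takes. With Celery and Redis, the queue and the worker live in separate processes, which is what lets the workers scale independently of the web service.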

Continuous Monitoring with Flower

To keep an eye on the system, we deployed Flower, an open-source monitoring tool for Celery. It lets us track the progress and results of each task submitted to the queue.
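Flower gives operators a dashboard view of the queue. For a single task, the same kind of information can also be polled programmatically. The following is a minimal sketch of such a polling loop, assuming a caller-supplied `get_state` function (a hypothetical name; in practice it might wrap a status endpoint or a Celery result lookup). The terminal states listed are Celery's own ready states.

```python
import time

# Celery's terminal task states; a task in one of these will not change again.
READY_STATES = {"SUCCESS", "FAILURE", "REVOKED"}


def wait_for_task(get_state, task_id, timeout=60.0, interval=1.0, sleep=time.sleep):
    """Poll get_state(task_id) until it returns a terminal state or time runs out.

    get_state is a hypothetical callable supplied by the caller; it takes a
    task id and returns the current state name as a string.
    """
    deadline = time.monotonic() + timeout
    while True:
        state = get_state(task_id)
        if state in READY_STATES:
            return state
        if time.monotonic() >= deadline:
            raise TimeoutError(f"task {task_id} still {state} after {timeout}s")
        sleep(interval)


# Usage with a fake status source that finishes on the third poll.
states = iter(["PENDING", "STARTED", "SUCCESS"])
print(wait_for_task(lambda _id: next(states), "demo-task", interval=0.0))
```

Passing `sleep` as a parameter keeps the loop easy to test, and the timeout guards against waiting forever on a task that never reaches a terminal state.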

Render

In our quest to streamline and optimize our cloud infrastructure, we turned to Render for its simplicity and effectiveness in managing cloud resources. Render's platform has significantly simplified the process of spinning up and managing the various components of our backtesting engine, allowing us to focus more on development and less on the complexities of infrastructure management.

YAML-based IaC

Render uses YAML-based Infrastructure as Code (IaC) files, which have been a game-changer for us. Compared with more complex IaC tools like Terraform, Render's approach is more straightforward and less time-consuming. With these YAML files, we can easily define and configure the resources we need, such as Flask applications, Celery workers, and Redis instances. This simplicity speeds up our deployment process and makes it less error-prone.

This is how easy it is to create a Flask web service with Render:

    services:
      - type: web
        name: app
        region: oregon
        env: python
        buildCommand: "pip install -r requirements.txt"
        startCommand: "gunicorn app:app"
        autoDeploy: true
        envVars:
          - key: CELERY_BROKER_URL
            fromService:
              name: celery-redis
              type: redis
              property: connectionString

Conclusion

In conclusion, our approach to orchestrating long-running backtesting tasks in the cloud demonstrates the power of combining Flask, Celery, and Redis. This trio forms the backbone of our backtesting engine, allowing us to efficiently process complex financial simulations while maintaining a responsive and user-friendly interface. As we move forward, we continue to explore new ways to enhance this architecture.

Stay tuned for further updates as we delve deeper into the cloud.